Three Dimensions of Understanding Human Behavior

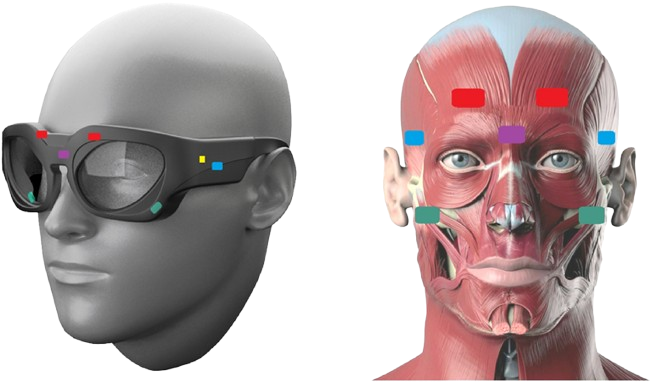

The OCOsense™ smart glasses combine six optical OCOsense™ sensors, three proximity sensors, a 9-axis IMU, and a pressure sensor (altimeter), all discreetly integrated into the frame. This multi-modal approach supports comprehensive facial expression tracking, activity recognition, and eating detection [1, 2].

Revolutionizing Facial Expression Tracking

Facial expressions are crucial to understanding emotional responses; however, distinguishing subtle movements such as frowning or smiling in natural settings is complex. By applying machine learning techniques to the OCOsense™ sensor data, our system can identify a range of expressions (including smiles, brow raises, frowns, and squinted eyes) with 97% accuracy, even while the wearer is speaking or eating [3]. The sensors track micro-movements of the skin across the forehead, cheeks, and around the eyes in real time, using a non-contact optical method known as “optomyography” (OMG) [1].
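To give a feel for how a continuous sensor stream might be prepared for a machine learning model, here is a minimal sketch of sliding-window feature extraction. It is an illustration only, not the OCOsense™ pipeline: the window size, step, the choice of mean and standard deviation as features, and the names `sliding_windows` and `window_features` are all assumptions, and the data is synthetic.

```python
from statistics import mean, pstdev


def sliding_windows(samples, window_size, step):
    """Yield consecutive windows of `window_size` samples, advancing by `step`."""
    for start in range(0, len(samples) - window_size + 1, step):
        yield samples[start:start + window_size]


def window_features(window):
    """Return per-channel mean and standard deviation for one window.

    `window` is a list of samples; each sample is a tuple with one value
    per sensor channel (six here, mirroring the six optical sensors).
    """
    channels = list(zip(*window))  # transpose: samples -> channels
    features = []
    for channel in channels:
        features.append(mean(channel))
        features.append(pstdev(channel))
    return features


# Synthetic 6-channel stream of 10 samples; the values are placeholders.
stream = [tuple(float(t + c) for c in range(6)) for t in range(10)]

# Hypothetical settings: 4-sample windows that overlap by half.
feature_rows = [
    window_features(w) for w in sliding_windows(stream, window_size=4, step=2)
]
```

Each row in `feature_rows` could then be passed to a classifier of your choice; the source does not specify which features or classifier the published system uses.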

Enhancing Activity Recognition

Knowing what someone is doing, whether walking, standing, eating, or performing hygiene tasks, adds critical context to facial expression data. We use a ConvLSTM deep learning model that learns from the glasses' motion sensors to recognize daily activities. Testing on both simulated and real-life datasets yielded F1 scores of 89% and 82%, respectively [3]. This robustness makes the glasses well suited to long-term, in-home behavioral studies or health monitoring.
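For readers unfamiliar with the F1 score, the sketch below shows how it is computed from true and predicted activity labels. The labels are made up for illustration, and averaging the per-class scores (macro F1) is an assumption; the source does not state which averaging the reported figures use.

```python
def f1_score(true_labels, predicted_labels, positive):
    """F1 for one class: the harmonic mean of precision and recall."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_f1(true_labels, predicted_labels):
    """Unweighted average of the per-class F1 scores."""
    classes = sorted(set(true_labels) | set(predicted_labels))
    scores = [f1_score(true_labels, predicted_labels, c) for c in classes]
    return sum(scores) / len(scores)


# Made-up labels for six time windows.
true_activity = ["walk", "walk", "stand", "eat", "eat", "stand"]
predicted_activity = ["walk", "stand", "stand", "eat", "walk", "stand"]

score = macro_f1(true_activity, predicted_activity)
```

Because F1 balances precision and recall, it is less flattering than plain accuracy when some activities are much rarer than others.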

Redefining Eating Detection

One of the more novel applications of OCOsense™ is automatic eating behavior monitoring. Chewing activates distinct facial muscles, especially in the temples and cheeks. Our sensors capture these patterns with remarkable precision, enabling the glasses to distinguish chewing from speaking, smiling, or other movements [2]. Our chewing detection model achieved F1 scores of 91% and 88% in controlled and real-life environments, respectively [3]. The algorithm continues to improve as it is trained on more user data.
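One common post-processing step for detectors of this kind is to group per-window predictions into continuous segments. The sketch below assumes a model that outputs one chewing/not-chewing decision per non-overlapping window; the function name `chewing_segments`, the window length, and the minimum-run threshold are hypothetical and not taken from the published work.

```python
def chewing_segments(window_predictions, window_seconds, min_windows=2):
    """Group consecutive positive windows into (start_s, end_s) segments.

    Runs shorter than `min_windows` are dropped as likely false alarms.
    Assumes non-overlapping windows of `window_seconds` each.
    """
    segments = []
    run_start = None
    for index, is_chewing in enumerate(window_predictions):
        if is_chewing and run_start is None:
            run_start = index  # a new run of chewing windows begins
        elif not is_chewing and run_start is not None:
            if index - run_start >= min_windows:
                segments.append(
                    (run_start * window_seconds, index * window_seconds)
                )
            run_start = None
    # Close a run that lasts until the end of the recording.
    total = len(window_predictions)
    if run_start is not None and total - run_start >= min_windows:
        segments.append((run_start * window_seconds, total * window_seconds))
    return segments


# Made-up predictions for ten 2-second windows (1 = chewing).
predictions = [0, 1, 1, 1, 0, 1, 0, 0, 1, 1]
segments = chewing_segments(predictions, window_seconds=2.0)
```

With these inputs, the isolated positive at the sixth window is discarded, leaving two segments.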

Key use cases for OCOsense™ smart glasses:

The power of OCOsense™ lies in its ability to unobtrusively monitor facial expressions in natural settings, opening up a world of possibilities:

● Real-time Emotional Analytics

Imagine understanding emotional responses in everyday situations. This is crucial for applications in market research, customer experience analysis, education & training, and even in personal well-being apps [1].

● Healthcare Applications

Monitoring subtle facial cues can be vital in assessing pain, fatigue, stress, or even tracking progress in rehabilitation for neurological conditions. This can lead to more personalized and timely interventions [1].

● Research & Behavioral Studies

For scientists studying human behavior and emotions, OCOsense™ offers a discreet and accurate tool to collect rich data outside the confines of a lab, making the findings more relevant to everyday life. Additionally, in high-stakes environments such as air traffic control or driving, the glasses can detect signs of distress or distraction and alert systems to help prevent errors [2].

● Augmented Reality (AR) & Virtual Reality (VR)

Using real-time facial expression data could make AR/VR experiences feel more alive, with avatars reflecting your emotions or apps adjusting to how you're feeling [1].

● Context-Aware Insights

By combining facial expression data with head movement, activity, and speech detection (via the motion sensor, altimeter, and microphones), the glasses can offer deeper insights into a wearer's emotional state tied to their specific activities and environment [1, 2]. This helps interpret expressions within their true context. The glasses also enhance human-machine interaction, particularly for individuals with limited mobility, by enabling control of devices or computers through facial expressions and head movements.
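As a rough illustration of tying expressions to context, the sketch below attaches an activity label to each detected expression by timestamp. The data structures, the function name `label_at`, and all values are hypothetical; the source does not describe how the glasses' software performs this fusion.

```python
from bisect import bisect_right


def label_at(timestamp, intervals):
    """Return the activity label whose interval contains `timestamp`.

    `intervals` is a list of (start_s, end_s, label) tuples sorted by start
    time and non-overlapping. Returns None if no interval covers the time.
    """
    starts = [start for start, _, _ in intervals]
    index = bisect_right(starts, timestamp) - 1
    if index < 0:
        return None
    _, end, label = intervals[index]
    return label if timestamp < end else None


# Made-up outputs of an activity recognizer and an expression detector.
activity_intervals = [
    (0.0, 30.0, "walking"),
    (30.0, 90.0, "eating"),
    (100.0, 120.0, "standing"),
]
expression_events = [(12.5, "smile"), (45.0, "frown"), (95.0, "brow raise")]

contextualized = [
    (time_s, expression, label_at(time_s, activity_intervals))
    for time_s, expression in expression_events
]
```

Here the brow raise at 95 seconds falls in a gap between recognized activities, so it is paired with `None` rather than with a guessed context.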

Conclusion

The OCOsense™ smart glasses are a transformative advancement in behavioral analytics, delivering clinical-grade data in real-life contexts. By capturing facial expressions, daily activity, and eating habits with remarkable accuracy, these glasses bring research and care into the spaces where real life unfolds [1, 2]. Their innovative applications—spanning real-time emotional analytics, healthcare monitoring, behavioral research, immersive AR/VR experiences, and context-aware insights—demonstrate Emteq Labs®' commitment to addressing diverse needs.

We are proud to lead this charge, developing solutions that respect the complexity and variability of human behavior. To discover more about the future of emotional analytics and how OCOsense™ is shaping it, we invite you to follow us on social media, or explore our detailed article for a deeper dive into this transformative technology.

References

[1] Archer, J. A., Mavridou, I., Stankoski, S., Broulidakis, M. J., Cleal, A., Walas, P., Fatoorechi, M., Gjoreski, H., & Nduka, C. (2023). OCOsense™ smart glasses for analyzing facial expressions using optomyographic sensors. IEEE Pervasive Computing, 22(3), 53–61. https://doi.org/10.1109/MPRV.2023.3276471

[2] Stankoski, S., Kiprijanovska, I., Gjoreski, M., Panchevski, F., Sazdov, B., Sofronievski, B., Cleal, A., Fatoorechi, M., Nduka, C., & Gjoreski, H. (2024). Controlled and real-life investigation of optical tracking sensors in smart glasses for monitoring eating behaviour using deep learning: Cross-sectional study (Preprint). JMIR mHealth and uHealth, 12, e59469. https://doi.org/10.2196/59469

[3] Gjoreski, H., Sazdov, B., Stankoski, S., Kiprijanovska, I., Panchevski, F., Sofronievski, B., Indovska, E., Cleal, A., Fatoorechi, M., Baert, C., Nduka, C., & Gjoreski, M. (2024). Smartglasses for Behaviour Monitoring: Recognizing Facial Expressions, Daily Activities, and Eating Habits. In Intelligent Environments 2024: Combined Proceedings of Workshops and Demos & Videos Session (pp. 164–169). IOS Press. https://doi.org/10.3233/AISE240031
